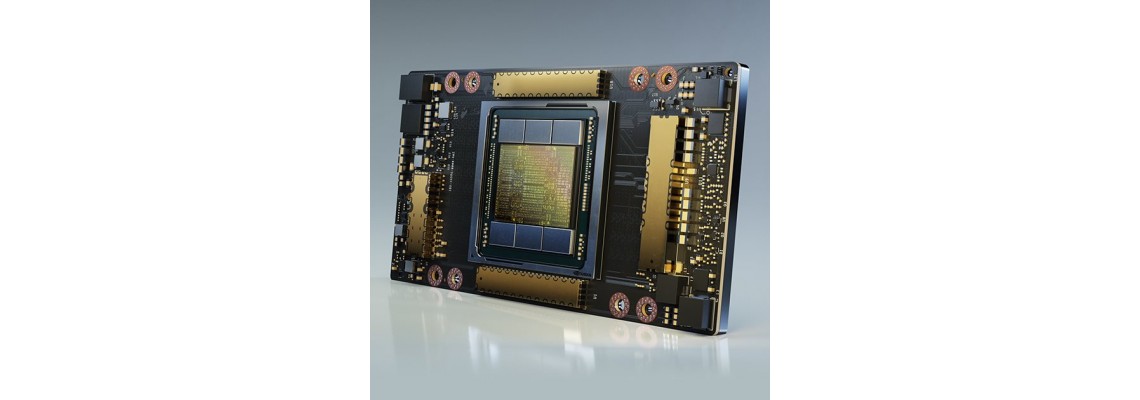

Nvidia's new Ampere architecture is a major generational step, bringing enhanced RT Cores and Tensor Cores, a redesigned streaming multiprocessor (SM), and faster memory. It powers the current RTX 3000 series GPUs as well as the A100 data-center GPU, and Nvidia presents it as a massive leap in performance over the previous generation. The Ampere architecture is an important inflection point for Nvidia: it brought the company's first 7nm GPU (the data-center GA100), while the consumer parts are built on an 8nm process. Either way, the process packs significantly more transistors into a given area than before.

Now let's look at Ampere's SM and its four processing blocks (SPs):

In the GA100 chip behind the A100, every SP has sixteen 32-bit floating-point (FP32) units, sixteen 32-bit integer (INT32) units, eight 64-bit floating-point (FP64) units, and one Tensor Core. The full GA100 chip has 128 SMs, for a total of 8,192 FP32 units, 8,192 INT32 units, 4,096 FP64 units, and 512 Tensor Cores. This is one hell of a dense device. The A100 ships with only 108 of those SMs activated, which still leaves 6,912 FP32 and 6,912 INT32 units, 3,456 FP64 units, and 432 Tensor Cores available to run workloads.
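The chip-wide totals follow directly from the per-SP counts. Here is a minimal Python sketch of that arithmetic, assuming four SPs per SM, one Tensor Core per SP, 128 SMs on the full GA100, and 108 SMs enabled on the A100; `chip_totals` is a hypothetical helper written only for illustration:

```python
# Per-SP (processing block) unit counts for GA100.
PER_SP = {"FP32": 16, "INT32": 16, "FP64": 8, "Tensor Core": 1}
SPS_PER_SM = 4  # each SM is split into four processing blocks


def chip_totals(num_sms: int) -> dict[str, int]:
    """Scale per-SP unit counts up to whole-chip totals."""
    return {unit: count * SPS_PER_SM * num_sms for unit, count in PER_SP.items()}


full_ga100 = chip_totals(128)  # full chip: 128 SMs
a100 = chip_totals(108)        # shipping A100: 108 SMs enabled

for unit in PER_SP:
    print(f"{unit:12s} full GA100: {full_ga100[unit]:5d}   A100: {a100[unit]:5d}")
```

Disabling 20 SMs removes the same fraction (20/128) of every unit type, which is why all of the A100 numbers are exactly 108/128 of the full-chip numbers.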

Important features

- 9.7 TFLOPS FP64 double-precision floating-point performance

- Up to 19.5 TFLOPS FP64 double-precision via Tensor Core FP64 instruction support

- 19.5 TFLOPS FP32 single-precision floating-point performance

- TensorFloat-32 (TF32) instructions improve performance, typically without loss of accuracy in deep-learning workloads

- Sparse matrix optimizations potentially double training and inference performance

- Speedups of 3x to 20x for network training, with sparse TF32 Tensor Cores (vs. Tesla V100)

- Speedups of 7x to 20x for inference, with sparse INT8 Tensor Cores (vs. Tesla V100)

- Tensor Cores support many data types: FP64, TF32, BF16, FP16, INT8, INT4, and binary (B1)

- High-speed HBM2/HBM2e memory delivers 40GB or 80GB of capacity at 1.6TB/s or 2TB/s of bandwidth

- Multi-Instance GPU (MIG) lets each A100 GPU be partitioned into up to seven separate, isolated instances, each running its own application

- 3rd-generation NVLink doubles transfer speeds between GPUs

- 4th-generation PCI-Express doubles transfer speeds between the system and each GPU

- Native ECC Memory detects and corrects memory errors without any capacity or performance overhead

- Larger and Faster L1 Cache and Shared Memory for improved performance

- Improved L2 Cache is twice as fast and nearly seven times as large as L2 on Tesla V100

- Compute Data Compression accelerates compressible data patterns, resulting in up to 4x faster DRAM bandwidth, up to 4x faster L2 read bandwidth, and up to 2x increase in L2 capacity.
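The headline FP32 and FP64 figures in the list can be roughly reproduced from the unit counts. The sketch below assumes the A100's published 1,410 MHz boost clock (not stated in this article) and counts each fused multiply-add (FMA) as two floating-point operations. `peak_tflops` is a hypothetical helper, and the result is a theoretical peak, not measured performance:

```python
BOOST_CLOCK_HZ = 1.41e9  # assumption: A100 boost clock of 1,410 MHz
SMS = 108                # SMs enabled on the A100


def peak_tflops(units_per_sm: int, flops_per_unit_per_clock: int = 2) -> float:
    """Peak = SMs x units per SM x FLOPs per unit per clock x clock rate.

    The default of 2 FLOPs per clock counts one FMA as a multiply plus an add.
    """
    return SMS * units_per_sm * flops_per_unit_per_clock * BOOST_CLOCK_HZ / 1e12


fp32 = peak_tflops(64)   # 4 SPs x 16 FP32 units per SM
fp64 = peak_tflops(32)   # 4 SPs x 8 FP64 units per SM
fp64_tensor = 2 * fp64   # the Tensor Core FP64 path doubles the FP64 rate

print(f"FP32:             {fp32:.2f} TFLOPS")
print(f"FP64:             {fp64:.2f} TFLOPS")
print(f"FP64 Tensor Core: {fp64_tensor:.2f} TFLOPS")
```

This works out to about 19.49, 9.75, and 19.49 TFLOPS, close to the rounded 19.5, 9.7, and 19.5 TFLOPS figures quoted above.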
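To see what TF32 gives up, note that it keeps FP32's 8-bit exponent (so the same numeric range) but only 10 explicit mantissa bits instead of 23. The Python sketch below emulates that rounding on the host. `to_tf32` is a hypothetical helper; it rounds to nearest with ties away from zero and does not special-case NaN or infinity. Treat it as an illustration of the precision loss, not as a model of the hardware:

```python
import struct


def to_tf32(x: float) -> float:
    """Round a value to TF32 precision: the FP32 layout with only 10 mantissa bits.

    Sketch only: rounds to nearest (ties away from zero) and does not
    special-case NaN or infinity.
    """
    # Reinterpret the FP32 bit pattern as an unsigned 32-bit integer.
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    # Add half of a TF32 step (bit 12), then clear the 13 low mantissa bits
    # that TF32 drops; any carry ripples into the exponent as needed.
    bits = (bits + (1 << 12)) & 0xFFFFE000
    return struct.unpack("<f", struct.pack("<I", bits))[0]


for value in (1.0, 3.14159265, 0.1):
    print(f"{value!r:>12} -> {to_tf32(value)!r}")
```

Values such as 1.0 pass through unchanged, while pi becomes 3.140625. That relative error of roughly one part in a thousand is usually tolerable for neural-network training, which is the basis for the accuracy claim in the list.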
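The sparsity speedups rely on fine-grained structured sparsity. In every group of four consecutive weights, at most two may be nonzero (the 2:4 pattern), which lets the sparse Tensor Cores skip the zeros. A common way to reach that pattern is magnitude pruning, which keeps the two largest weights in each group. The Python sketch below shows only that pruning step on a flat list. `prune_2_of_4` is a hypothetical helper; real workflows typically prune trained weight matrices and then fine-tune the network to recover accuracy:

```python
def prune_2_of_4(weights: list[float]) -> list[float]:
    """Zero the two smallest-magnitude values in every group of four weights."""
    if len(weights) % 4 != 0:
        raise ValueError("length must be a multiple of 4")
    pruned: list[float] = []
    for start in range(0, len(weights), 4):
        group = weights[start:start + 4]
        # Indices of the two largest-magnitude weights in this group.
        keep = sorted(range(4), key=lambda j: abs(group[j]), reverse=True)[:2]
        pruned.extend(w if j in keep else 0.0 for j, w in enumerate(group))
    return pruned


dense = [0.1, -0.9, 0.5, 0.05, 1.0, 2.0, -3.0, 0.2]
sparse = prune_2_of_4(dense)
print(sparse)
```

After pruning, every group holds exactly two nonzeros. The matrix can then be stored as half of its values plus small per-value position indices, and that halved workload is where the "potentially double" performance figure comes from.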

Final Words

The Ampere architecture offers impressive improvements in ray tracing and DLSS, and its performance is the best available right now. The other significant addition in Ampere is PCIe Gen 4 support, which offers much higher bandwidth and could prove quite useful in the future.